Unstructured data analysis is one of the most interesting aspects of “big data”. It’s certainly impressive to be able to process massive amounts of structured data in no time, but analyzing unstructured data opens completely new possibilities, that can lead to the creation of whole new disciplines or industries. To test out HANA text analysis capabilities, I thought I would try to load my blog into a column table, and see what it can do.

Leveraging my company AWS HANA instance, I started out by making a simple single column table. The important thing to note here is that for text analytics to work, the data type to be used has to be NCLOB. BLOB for example, will not work.

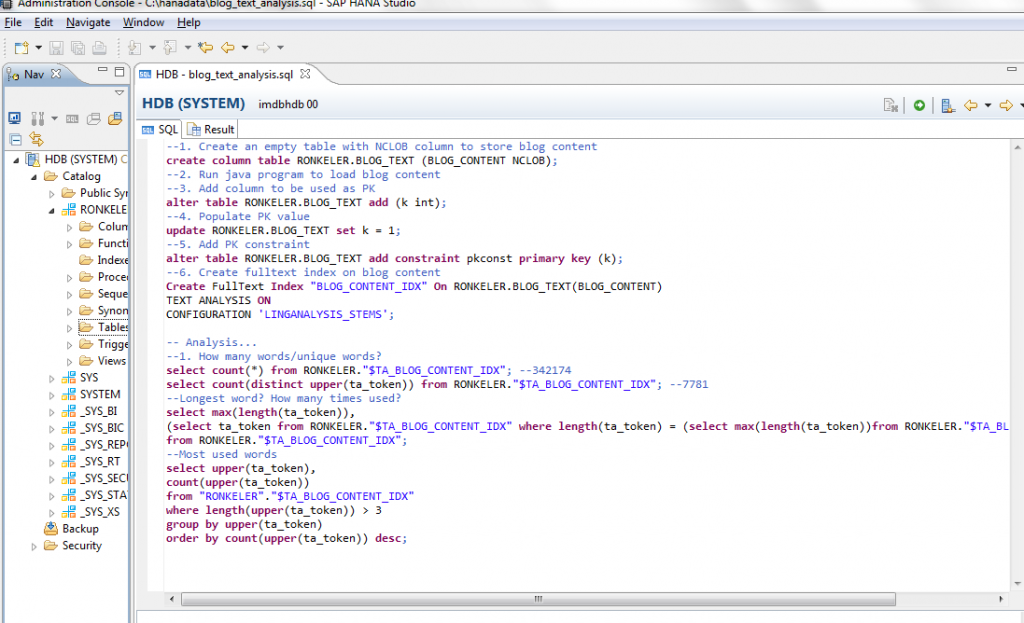

So, in HANA studio, after connecting to the HANA instance and my schema, I executed:

--1. Create an empty table with NCLOB column to store blog content create column table RONKELER.BLOG_TEXT (BLOG_CONTENT NCLOB);

The next step was a bit more interesting. How do I actually load my blog into the table…? Well. First, I had to get my blog out to a file. Since I use wordpress, that was as simple as selecting the Export option from the Tools menu of the administration section.

With my blog exported as an xml file, I set my sights on loading it into my table. Data Services would be my typical choice, as it’s fast, easy to use, and has great integration with HANA. However, to keep my options open, I looked for a programmatic solution that will allow more robust capabilities. And as it turns out, the solution was similar to loading a blob object into any other database. I ended up writing a small java program to load the file in.

To connect to HANA in java, I needed to find the ngdbc.jar library and add it to my project build path in Eclipse. The rest was pretty standard:

import java.io.*; import java.sql.*;

public class HanaConn {

public static void main(String args[]) { try { File f = new File("C:\\FOLDER\\FILE.xml"); InputStream is = new FileInputStream(f);

Class.forName("sun.jdbc.odbc.JdbcOdbcDriver");

java.sql.Connection conn = DriverManager.getConnection(

"jdbc:odbc:imdbhdb", "SYSTEM", "PWD");

conn.setAutoCommit(false);

PreparedStatement stmt = conn

.prepareStatement("INSERT INTO RONKELER.BLOG_TEXT VALUES(?)");

stmt.setBinaryStream(1, is, (int) f.length());

stmt.executeUpdate();

conn.commit();

is.close();

System.out.println("Done inserting!");

stmt.close();

conn.close();

} catch (Exception e) { System.out.println("Exception occured: " + e.getMessage()); e.printStackTrace(); } } }

So, step 2

--2. Run java program to load blog content

Next, I modified my table to add a primary key. Using the text analytics requires the analyzed table have a PK:

--3. Add column to be used as PK alter table RONKELER.BLOG_TEXT add (k int); --4. Populate PK value update RONKELER.BLOG_TEXT set k = 1; --5. Add PK constraint alter table RONKELER.BLOG_TEXT add constraint pkconst primary key (k);

So far, things have been pretty standard. The cool part was turning on the text analytics. Using one simple SQL command, HANA processed the content of my text column, and parsed it out in nano seconds!

--6. Create fulltext index on blog content Create FullText Index "BLOG_CONTENT_IDX" On RONKELER.BLOG_TEXT(BLOG_CONTENT) TEXT ANALYSIS ON CONFIGURATION 'LINGANALYSIS_STEMS';

This query generated a table called $TA_BLOG_CONTENT_IDX. This table included a row for each word in my blog, allowing me to then run some queries to perform analysis on the content of my blog..:

-- Analysis... --1. How many words/unique words? select count(*) from RONKELER."$TA_BLOG_CONTENT_IDX"; --342174 words! Wow, who knew i wrote so much... select count(distinct upper(ta_token)) from RONKELER."$TA_BLOG_CONTENT_IDX"; --7781 unique words... Maybe i need to read more to expand my vocabulary.. --Longest word? How many times used? select max(length(ta_token)), (select ta_token from RONKELER."$TA_BLOG_CONTENT_IDX" where length(ta_token) = (select max(length(ta_token))from RONKELER."$TA_BLOG_CONTENT_IDX")) from RONKELER."$TA_BLOG_CONTENT_IDX"; --54;VbZDUzY2M2ZDQtYzNmMC00OTJjLTlhMDUtNDU3MGMyY2ZkOWZm& well, not really a word, but you get the idea --Most used words select upper(ta_token), count(upper(ta_token)) from "RONKELER"."$TA_BLOG_CONTENT_IDX" where length(upper(ta_token)) > 3 group by upper(ta_token) order by count(upper(ta_token)) desc; -- Well, need to do some more with this, but Xcelsius and Webi were pretty high up on the list

Of course, this is a tiny example, but the ability to store and quick and easily parse text can be an important feature in any HANA implementation. From social media content to corporate documents, this is a game changer!