If you knew who was dominating the news yesterday, could you predict who will dominate the news tomorrow? That is what I set out to find out, using some of the new data technologies available to us today…

The big data revolution continues, quietly but surely, to change the course of our evolution: how we behave, how we interact, and how we think. This is not because the concepts behind big data are new or unprecedented. Harnessing information for decision making and prediction is an old idea. What has changed is that technologists, entrepreneurs, scientists and analysts can now do things they could only dream of a few short decades ago, because the level of calculation and computation this requires has become possible, accessible and financially feasible.

SAP HANA is a great case in point. I have been working with some of my colleagues at Cleartelligence on an interesting use case that demonstrates how accessible and feasible big data concepts have become: predicting the news.

This is an especially exciting topic, at an exciting time, for me, because it lets me combine several of my passions and skills: my IT degree and background, my psychology degree, and my interest in social studies (my high school major), in ways I could never have imagined before.

I started with a premise, phrased as a question: can the news, as reported in popular media channels, be predicted? My hypothesis is yes, to a degree. If we could amass enough data, we could look for patterns in news categories and check whether those categories show seasonality. For example, do certain types of news recur at certain times of the week or year? Are there sentiment patterns we can use to predict tomorrow's news?
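One way to make the seasonality question testable, assuming we already have a daily count of stories per news category, is the sample autocorrelation of that series: a clearly positive value at lag 7 hints at a weekly cycle. This is a standard statistic offered as a minimal sketch, not necessarily the analysis used in this project; the `Seasonality` class and its method name are hypothetical.

```java
/** Hypothetical helper: lag-k sample autocorrelation of a daily count series. */
final class Seasonality {
    private Seasonality() {}

    /**
     * Returns sum_{t>=lag} (x_t - mean)(x_{t-lag} - mean) / sum_t (x_t - mean)^2.
     * Values near 0 mean no linear relationship between a day and the day `lag` days earlier.
     */
    static double autocorrelation(double[] series, int lag) {
        if (lag <= 0 || lag >= series.length) {
            throw new IllegalArgumentException("lag must be in [1, series.length - 1]");
        }
        double mean = 0.0;
        for (double v : series) {
            mean += v;
        }
        mean /= series.length;

        // Denominator: total variation of the whole series.
        double denom = 0.0;
        for (double v : series) {
            denom += (v - mean) * (v - mean);
        }
        if (denom == 0.0) {
            return 0.0; // a constant series has no variation to correlate
        }

        // Numerator: co-variation between each day and the day `lag` days before it.
        double num = 0.0;
        for (int t = lag; t < series.length; t++) {
            num += (series[t] - mean) * (series[t - lag] - mean);
        }
        return num / denom;
    }
}
```

For four weeks of a perfectly repeating weekly pattern, lag 7 yields 0.75 rather than 1.0: this estimator divides by the variation of all 28 days, while only 21 lagged pairs contribute to the numerator. Real category counts would be noisier, so the value is best compared against other lags rather than read in isolation.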

Just a few years ago, testing such a concept was out of reach for anyone but the few who had access to the world's most expensive computing equipment. Today, we can tackle such a project with commodity hardware and enterprise software available to any organization.

So, the first step in the project is to collect data. The more data we gather, the better and more accurate our analysis can become. This is one of the key reasons big data is becoming so pervasive today: the technology now exists to collect sufficient amounts of data and to process them efficiently and economically, producing high-quality results.

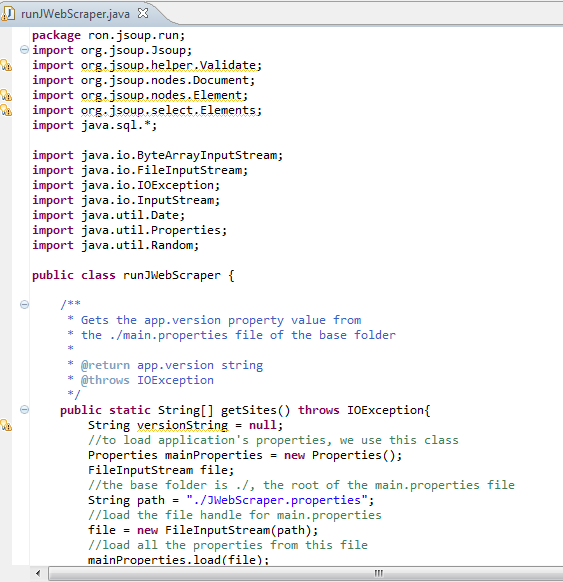

To collect my data, I wrote a small Java program that crawls several of the top news web sites and scrapes their front pages into our HANA database. The program runs nightly, so each day the news database grows by one front-page snapshot for every web site scraped.
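The author's crawler is not shown, so here is a minimal sketch of what one nightly run could look like, assuming Java 11+ (for `java.net.http.HttpClient`) and a JDBC connection to HANA. The `NEWS_PAGES(URL, FETCHED_AT, CONTENT)` table and every class and interface name here are hypothetical. Fetching and storage sit behind small interfaces so the loop can be exercised without network or database access.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.sql.Timestamp;
import java.time.Instant;
import java.util.List;

/** Hypothetical abstraction over "download one front page". */
interface PageFetcher {
    byte[] fetch(String url) throws IOException, InterruptedException;

    /** Default implementation using the JDK HTTP client (Java 11+). */
    static PageFetcher http() {
        HttpClient client = HttpClient.newBuilder()
                .followRedirects(HttpClient.Redirect.NORMAL)
                .build();
        return url -> {
            HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
            HttpResponse<byte[]> response =
                    client.send(request, HttpResponse.BodyHandlers.ofByteArray());
            if (response.statusCode() != 200) {
                throw new IOException("HTTP " + response.statusCode() + " for " + url);
            }
            return response.body();
        };
    }
}

/** Hypothetical abstraction over "persist one snapshot". */
interface PageStore {
    void save(String url, Instant fetchedAt, byte[] html) throws SQLException;
}

/** Writes snapshots to an assumed NEWS_PAGES(URL, FETCHED_AT, CONTENT BLOB) table. */
final class JdbcPageStore implements PageStore {
    private final Connection conn;

    JdbcPageStore(Connection conn) {
        this.conn = conn;
    }

    @Override
    public void save(String url, Instant fetchedAt, byte[] html) throws SQLException {
        String sql = "INSERT INTO NEWS_PAGES (URL, FETCHED_AT, CONTENT) VALUES (?, ?, ?)";
        try (PreparedStatement ps = conn.prepareStatement(sql)) {
            ps.setString(1, url);
            ps.setTimestamp(2, Timestamp.from(fetchedAt));
            ps.setBytes(3, html); // raw HTML goes into the BLOB column
            ps.executeUpdate();
        }
    }
}

/** One nightly run: fetch each site's front page once and store it. */
final class FrontPageCrawler {
    private final PageFetcher fetcher;
    private final PageStore store;

    FrontPageCrawler(PageFetcher fetcher, PageStore store) {
        this.fetcher = fetcher;
        this.store = store;
    }

    /** Returns the number of snapshots stored; a failing site is logged and skipped. */
    int runOnce(List<String> siteUrls) {
        Instant runTime = Instant.now(); // one timestamp per run groups the day's snapshots
        int saved = 0;
        for (String url : siteUrls) {
            try {
                store.save(url, runTime, fetcher.fetch(url));
                saved++;
            } catch (IOException | SQLException e) {
                System.err.println("Skipping " + url + ": " + e.getMessage());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // keep the interrupt flag and stop the run
                break;
            }
        }
        return saved;
    }
}
```

In production this would be wired as `new FrontPageCrawler(PageFetcher.http(), new JdbcPageStore(conn))`, with `conn` opened through the SAP HANA JDBC driver, and triggered nightly by whatever scheduler is at hand. Skipping a failing site rather than aborting keeps one unreachable page from costing the whole day's snapshot.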

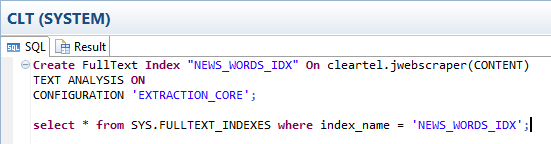

Next, I used HANA's text analysis functions to index the web site data, which is stored as BLOBs. HANA can automagically process free-form text with these functions, and it offers several configuration options for extracting meaning from the unstructured BLOBs, including LINGANALYSIS_BASIC, LINGANALYSIS_FULL and EXTRACTION_CORE. The options range in capability from parsing individual words to using complex linguistic analysis and pattern matching to retrieve specific information, such as customers' needs and perceptions.
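In HANA, text analysis is switched on when a full-text index is created on the text column, via `CREATE FULLTEXT INDEX ... CONFIGURATION '<option>' TEXT ANALYSIS ON`. The sketch below builds and runs that statement over JDBC. The index, table and column names (`NEWS_IDX`, `NEWS_PAGES`, `CONTENT`) and the `TextAnalysisIndex` class are hypothetical, and it should be checked against the SQL reference for your HANA version before use.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.Set;

/** Hypothetical helper that creates a HANA full-text index with text analysis enabled. */
final class TextAnalysisIndex {
    // The three configurations named in the text; HANA ships others as well.
    static final Set<String> CONFIGS =
            Set.of("LINGANALYSIS_BASIC", "LINGANALYSIS_FULL", "EXTRACTION_CORE");

    private TextAnalysisIndex() {}

    /** Builds the DDL. Identifiers are concatenated, so pass trusted constants only. */
    static String ddl(String indexName, String table, String column, String config) {
        if (!CONFIGS.contains(config)) {
            throw new IllegalArgumentException("Unsupported configuration: " + config);
        }
        return "CREATE FULLTEXT INDEX " + indexName + " ON " + table + " (" + column + ")"
                + " CONFIGURATION '" + config + "' TEXT ANALYSIS ON";
    }

    /** Executes the DDL on an open JDBC connection to HANA. */
    static void create(Connection conn, String indexName, String table, String column,
                       String config) throws SQLException {
        try (Statement st = conn.createStatement()) {
            st.execute(ddl(indexName, table, column, config));
        }
    }
}
```

Keeping the allowed configurations in a set turns a typo in the option name into an immediate Java exception instead of a failed DDL round trip.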

The EXTRACTION_CORE option proved extremely insightful: it not only extracted meaning from the BLOBs but also sorted what it found into predefined categories, which made the results simple and easy to use.
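To my understanding, HANA writes text analysis output to a generated table named `"$TA_<index name>"`, in which the `TA_TYPE` column holds the category assigned to each extracted item. A minimal sketch of counting mentions per category, and picking the dominant one, could look like this. The index name `NEWS_IDX` and the `CategoryCounts` class are hypothetical.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical helper: mentions per extracted category, most frequent first. */
final class CategoryCounts {
    private CategoryCounts() {}

    /** The "$TA_" table name must be quoted because it starts with '$'. */
    static String sql(String indexName) {
        return "SELECT TA_TYPE, COUNT(*) AS MENTIONS FROM \"$TA_" + indexName + "\""
                + " GROUP BY TA_TYPE ORDER BY MENTIONS DESC";
    }

    /** Runs the query; LinkedHashMap keeps the database's descending order. */
    static Map<String, Long> fetch(Connection conn, String indexName) throws SQLException {
        Map<String, Long> counts = new LinkedHashMap<>();
        try (PreparedStatement ps = conn.prepareStatement(sql(indexName));
             ResultSet rs = ps.executeQuery()) {
            while (rs.next()) {
                counts.put(rs.getString(1), rs.getLong(2));
            }
        }
        return counts;
    }

    /** The category with the most mentions, if any rows were returned. */
    static Optional<String> dominant(Map<String, Long> counts) {
        return counts.entrySet().stream()
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey);
    }
}
```

Run once per nightly batch, and filtered to that day's snapshots, these counts would give the per-category daily series that a seasonality check needs.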

The data-gathering program has only been running for a short time. As more data accumulates, I plan to keep updating this topic with additional insights, data visualization techniques and examples of interesting uses of this technology.